So, in other words: Each criteria has a min and max score, as well as a weight to set importance. Total_rating = 0 max_rating = len(ratings) * 5 for k in ratings: index( min(vals, key = lambda x: abs(x -val))) * self. However your specific criteria look like, the logic I used to determine the relative score of an area is based on the following script:ĭef _init_( self, min_v, max_v, weight = 1, order = 'asc'): Historic Savannah: Beautiful, far away, and expensive! At the same time, an area that is close to everything, but has an average price of $500/sqft isn’t desireable for me neither. Travel Time & Distance to work/gym/familyįor instance, the most beautiful and affordable neighborhood in the state wouldn’t do me any good if it means driving 4hrs one way to any point of interest that I frequent (such as Savannah, GA - one of the most gorgeous places in the state in my book, but a solid 4hr drive from Atlanta).This, of course, is a very subjective process for the purpose of this article, I’ll refrain from listing our specific criteria, and rather talk about high level categories, such as: So, one thing even a normal person might do is to establish deterministic criteria and their relative importance for comparing different options. The overarching goal for the Data Lake we’re about to build is to find a new neighborhood that matches our criteria, and those criteria will be more detailed than e.g. We then go through the build phases: 1) Infrastructure, 2) Ingestion, 3) Transformations with Spark, and finally, the 4) Analysis & Rating.Once we understand the data, we can design a Data Lake with logical and physical layers and the tech for it.Then, we explore potential Data Sources that can help us get there.First, we’ll talk about the end goal: Rating neighborhoods using a deterministic approach.In order to make this happen, here’s what we’ll talk about:

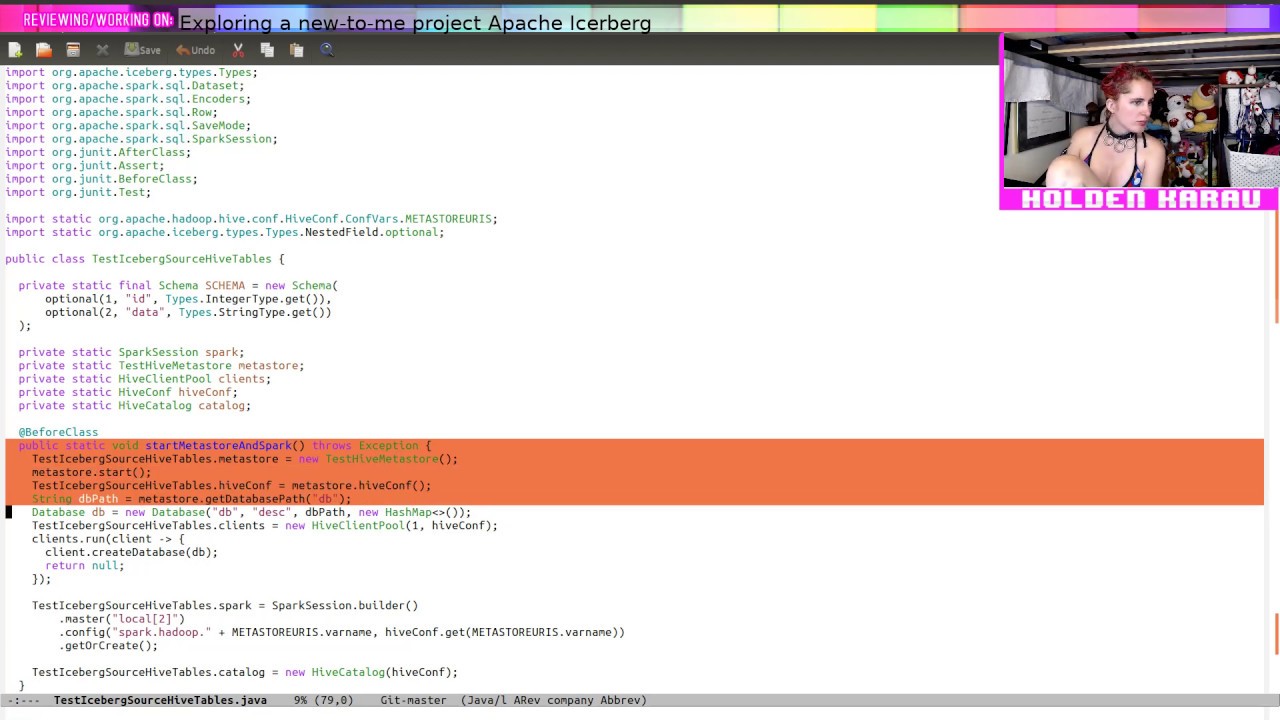

#Apache iceberg example full#

Different types of analytics on your data like SQL queries, big data analytics, full text search, real-time analytics, and machine learning can be used to uncover insights. This means you can store all of your data without careful design or the need to know what questions you might need answers for in the future. The structure of the data or schema is not defined when data is captured. You can store your data as-is, without having to first structure the data, and run different types of analytics-from dashboards and visualizations to big data processing, real-time analytics, and machine learning to guide better decisions.Ī data lake is different, because it stores relational data from line of business applications, and non-relational data from mobile apps, IoT devices, and social media. I’ll refer to the team over at AWS to explain what a Data Lake is and why I need one at home:Ī data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. Join me on this journey of pain and suffering, because we’ll be setting up an entire cluster locally to do something a web search can probably achieve much faster! On Data Lakes We’ll be talking about data and the joys of geospatial inconsistencies in the US, about tech and why setting up Spark and companions is still a nightmare, and a little bit about architecture and systems design, i.e. So I build a Mini Data Lake to query demographic and geographic data for finding a new neighborhood to buy a house in, using Spark and Apache Iceberg. In order to narrow that search area down, the first thing that came to mind was not “I should call our realtor!”, but rather “Hey, has the 2020 Census data ever been released?”. The entire Atlanta Metro area is about 8,400sqmi, which is larger than the entirety of Slovenia. Our search area was roughly 1,400sqmi, which is larger than the entire country of Luxembourg. See, the thing is, this country - the US, that is - is pretty big. 
So, when my SO and I started looking to move out of the city into a different, albeit somewhat undefined area, the first question was: Where do we even look? We knew that we wanted more space and more greenery and the general direction, but that was about it.

Writing a Telegram Bot to control a Raspberry Pi from afar (to observe Guinea Pigs) might give you an idea with regards to what I’m talking about. I’m not exactly known as the most straightforward person when it comes to using tech at home to solve problems nobody ever had. Calculating Travel Times & Geo-Matching.Cleaning up Tables & Migrating to Iceberg.

The 1st Build Phase: Setting up Infrastructure.Building a Data Lake with Spark and Iceberg at Home to over-complicate shopping for a House Contents
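To make the rating idea from this article concrete (each criterion has a min and max, a weight, and a sort order, and an area's score is the sum over all criteria), here is a minimal sketch. It is not the original script. The class name `RatingCriterion`, the helper `rate_area`, the six evenly spaced score steps (0 to 5), and the weight-aware maximum are all assumptions made for illustration.

```python
class RatingCriterion:
    """Hypothetical criterion: a value range, a weight, and a direction."""

    STEPS = 6  # assumed: scores run from 0 to 5

    def __init__(self, min_v, max_v, weight=1, order='asc'):
        if order not in ('asc', 'desc'):
            raise ValueError("order must be 'asc' or 'desc'")
        self.min_v, self.max_v = min_v, max_v
        self.weight = weight
        self.order = order

    def rate(self, val):
        # Evenly spaced anchor values between min_v and max_v.
        step = (self.max_v - self.min_v) / (self.STEPS - 1)
        vals = [self.min_v + i * step for i in range(self.STEPS)]
        if self.order == 'desc':
            vals.reverse()  # lower raw values now earn higher scores
        # Score = position of the anchor closest to val, scaled by the weight.
        # Values outside [min_v, max_v] snap to the nearest end of the range.
        return vals.index(min(vals, key=lambda x: abs(x - val))) * self.weight


def rate_area(ratings):
    """ratings maps a name to (criterion, observed value); returns a 0..1 share."""
    total_rating = 0
    max_rating = 0
    for k in ratings:
        criterion, val = ratings[k]
        total_rating += criterion.rate(val)
        max_rating += 5 * criterion.weight  # assumed: weights scale the maximum
    return total_rating / max_rating


# Usage with made-up numbers: a cheap area with a long commute.
area = {
    'price_per_sqft': (RatingCriterion(100, 500, weight=2, order='desc'), 150),
    'commute_minutes': (RatingCriterion(0, 60, order='desc'), 55),
}
print(f"relative score: {rate_area(area):.2f}")
```

Snapping each value to the nearest of a few fixed steps keeps the scores coarse on purpose: two areas that differ by a few dollars per square foot end up with the same score, and the weight alone decides how much a criterion matters.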
